Running Red Hat OpenShift on a Tiny Form Factor: My Hardware Picks and Setup Guide

In this blog post, I share my journey of running Red Hat OpenShift on a teeny-tiny form factor, from hand-picking cost-efficient hardware to working through the configuration steps. Get ready to explore the possibilities of running full OpenShift on the smallest device yet!

OpenShift has at least three flavors designed for different on-premises use cases: OpenShift, OpenShift Local, and MicroShift. Each has specific hardware requirements, which I have listed below. This information will help you select the right OpenShift version for your needs.

╔═════════════════╦═════════════════╦════════╦═════════╗
║ Flavor          ║ Cores           ║ RAM    ║ Storage ║
╠═════════════════╬═════════════════╬════════╬═════════╣
║ OpenShift       ║ 8 vCPUs         ║ 16GB   ║ 120GB   ║
║ OpenShift Local ║ 4 physical CPUs ║ 9GB    ║ 35GB    ║
║ MicroShift      ║ 2 CPUs          ║ 2GB    ║ 10GB    ║
╚═════════════════╩═════════════════╩════════╩═════════╝

Hardware

To access all the features of OpenShift on a single node (SNO), I needed a machine with 8 vCPUs, which a processor with 4 cores and 8 threads provides. After some research, I found a renewed M910 Tiny on Amazon with an Intel® Core™ i7-6700T processor, which met the requirements for just $280.

Creating an OpenShift cluster

I have installed Kubernetes manually several times (Kubernetes the hard way), so I was prepared for lengthy instructions. Luckily, OpenShift has made this process much simpler. Although I encountered a few issues, which I will elaborate on later, the installation process went smoothly and offered a seamless user experience.

For my homelab setup, I use a Ubiquiti gateway to connect to the internet, and I rely on AdGuard Home for DNS.

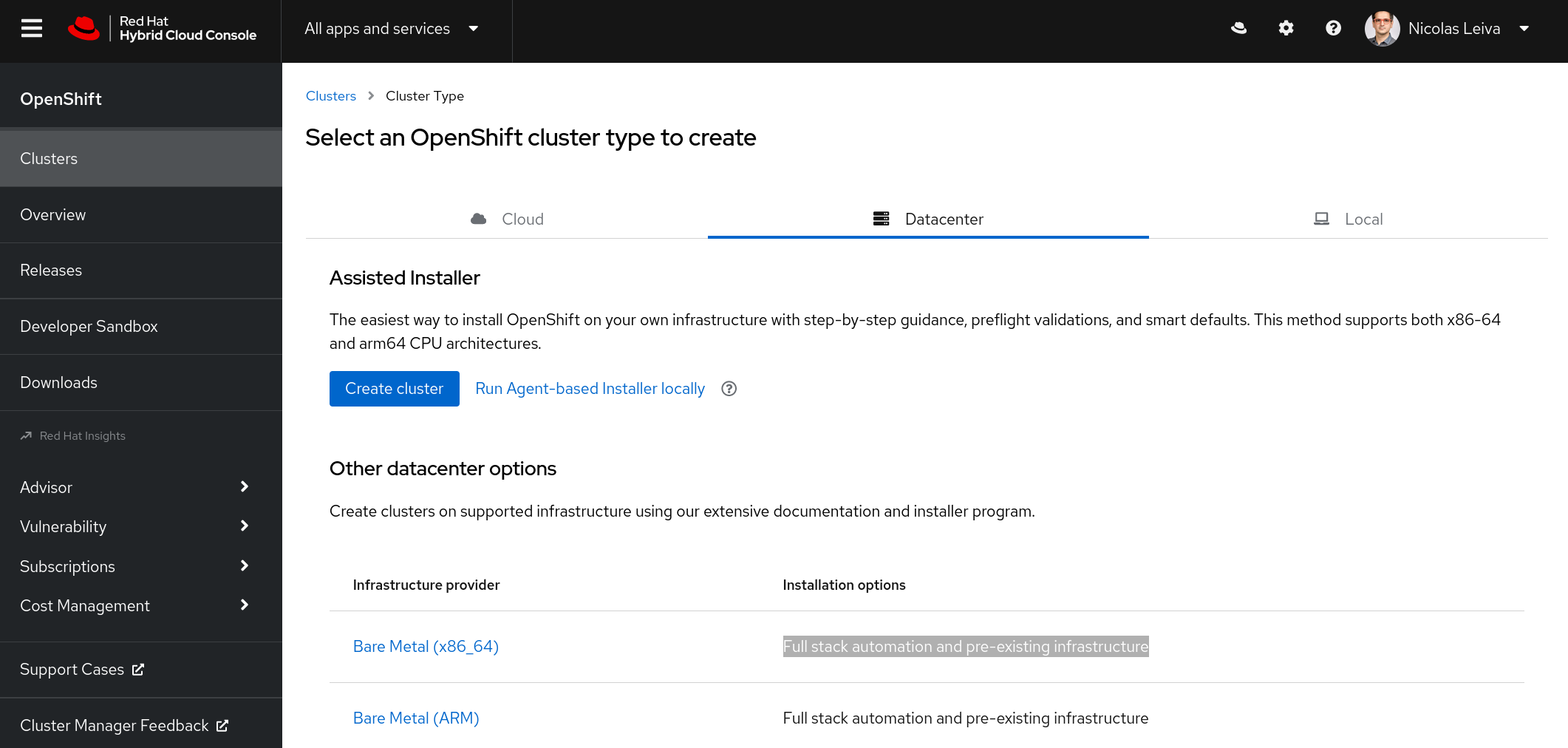

To start the process, go to https://console.redhat.com/openshift/create and select Datacenter > Bare Metal > Interactive (installation method). If you don’t have a Red Hat account, you can create one for free and run an OpenShift trial.

Next, name your cluster and select Install single-node OpenShift (SNO).

I named my cluster “in” and used lab.home for the base domain.

The next section of the interactive installer (Operators) lets you add OpenShift Virtualization and Logical Volume Manager Storage to the cluster. Do not select them if you only have 8 vCPUs like me: OpenShift Virtualization adds 6 vCPUs to the requirements, and Logical Volume Manager Storage adds 1 vCPU.
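To make that arithmetic concrete, here is a minimal shell sketch that adds up the vCPU requirement. The function names are my own, and the figures (8 base, 6 for OpenShift Virtualization, 1 for Logical Volume Manager Storage) are simply the ones quoted in this post, so check what the installer reports for your version.

```shell
# Sketch only: vCPU budget for single-node OpenShift plus optional operators.
# The numbers are the ones quoted in this post, not values read from the installer.

# required_vcpus VIRT LVM
#   Pass 1 to include an operator, 0 to leave it out. Prints the total vCPUs needed.
required_vcpus() {
    virt=$1
    lvm=$2
    echo $(( 8 + 6 * virt + 1 * lvm ))
}

# has_enough_vcpus AVAILABLE VIRT LVM
#   Succeeds (exit status 0) when AVAILABLE covers the requirement.
has_enough_vcpus() {
    [ "$1" -ge "$(required_vcpus "$2" "$3")" ]
}

# Example (not run here): compare against the threads the machine exposes.
#   has_enough_vcpus "$(nproc)" 1 1 || echo "Not enough vCPUs for both operators"
```

With both operators selected, the total comes to 15 vCPUs, which is why an 8-thread machine has no headroom for them.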

The next step is to generate an ISO file to boot any node you’d like to add to the cluster. There’s only one node in this case.

Click on Generate Discovery ISO, and off you go. The file size is approximately 1.1 GB.

DNS

Before booting this ISO on the server, you need to add a couple of DNS entries, as noted in the Requirements for installing OpenShift on a single node.

- Kubernetes API: api.<cluster_name>.<base_domain>
- Ingress route: *.apps.<cluster_name>.<base_domain>
- Internal API: api-int.<cluster_name>.<base_domain>

In my setup, this translates to api.in.lab.home, *.apps.in.lab.home, and api-int.in.lab.home, all pointing to 10.64.0.63, the IP address I statically allocate via DHCP to the server.
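Before booting the ISO, it can be worth confirming that the names resolve from your workstation. The following is a rough sketch, assuming dig is installed; the function names are hypothetical. Because a wildcard record cannot be queried directly, it tests one concrete host under *.apps (the console hostname used later in this post).

```shell
# Sketch only: check the DNS names a single-node cluster needs.
# Assumes `dig` is available (bind-utils or dnsutils package).

# sno_dns_names CLUSTER BASE_DOMAIN
#   Prints the names to test, one per line. The third name is a sample host
#   that should be covered by the *.apps wildcard record.
sno_dns_names() {
    cluster=$1
    base=$2
    printf '%s\n' \
        "api.${cluster}.${base}" \
        "api-int.${cluster}.${base}" \
        "console-openshift-console.apps.${cluster}.${base}"
}

# check_sno_dns CLUSTER BASE_DOMAIN EXPECTED_IP
#   Resolves each name and compares the last answer with EXPECTED_IP.
#   Returns non-zero if any name does not match.
check_sno_dns() {
    expected=$3
    status=0
    for name in $(sno_dns_names "$1" "$2"); do
        answer=$(dig +short "$name" | tail -n 1)
        if [ "$answer" = "$expected" ]; then
            echo "ok    $name -> $answer"
        else
            echo "FAIL  $name -> ${answer:-no answer} (expected $expected)"
            status=1
        fi
    done
    return "$status"
}

# Example (not run here), using the values from this post:
#   check_sno_dns in lab.home 10.64.0.63
```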

With DNS out of the way, I can now run the generated ISO on the server.

Boot from the ISO

You need a USB stick that the server can boot from. I use Fedora Media Writer to make a USB stick bootable with an ISO file.

To tell the server to boot from the USB stick, press F12 after powering on the computer to reach the boot menu. Then select USB and let it run.

This server has WiFi, which I had to disable to avoid kernel panics. In the boot menu, I selected Enter Setup and, under Network Setup, changed Wireless LAN to Disabled. Your system may have similar considerations.

After that, you can safely boot from the USB stick. The host will eventually show up as ready to install in the assisted installer interface.

Now it’s time to select the cluster’s internal networks. I used the defaults (10.128.0.0/14, 172.30.0.0/16), and I picked dual-stack support to leverage IPv6 (fd01::/48, fd02::/112).

After this, the installer takes care of all the rest. You no longer need to look at the server screen.

Networking

If hosts in your home network need to access the services you deploy in OpenShift, they must know how to reach the cluster’s internal networks you just configured (10.128.0.0/14, 172.30.0.0/16, fd01::/48, and fd02::/112). There are ways to do this dynamically, but for this example, I simply created static routes in the gateway pointing to the server (10.64.0.63 and 2600:4040…).
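I configured my routes in the gateway’s interface, but if you only want to try this from a single Linux machine, a sketch along these lines may help. It assumes iproute2 is installed, and the function name is made up for illustration. It only prints the commands so you can review them before running them with root privileges. The IPv6 next hop is an optional argument because it depends on your own network.

```shell
# Sketch only: print static-route commands for the cluster networks.
# Assumes a Linux host with iproute2; nothing is changed until you run the output yourself.

# print_cluster_routes NODE_IPV4 [NODE_IPV6]
#   NODE_IPV4 is the server's IPv4 address; NODE_IPV6 (optional) is its IPv6 address.
print_cluster_routes() {
    node4=$1
    node6=${2:-}
    # IPv4 cluster and service networks (the installer defaults used in this post)
    for net in 10.128.0.0/14 172.30.0.0/16; do
        echo "ip route add $net via $node4"
    done
    # IPv6 networks, only when a next hop was supplied
    if [ -n "$node6" ]; then
        for net in fd01::/48 fd02::/112; do
            echo "ip -6 route add $net via $node6"
        done
    fi
}

# Example (not run here):
#   print_cluster_routes 10.64.0.63
```

Routes added this way do not survive a reboot, which is one reason to put them on the gateway instead.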

Install the OpenShift command-line interface (CLI)

You can check if everything is working from your computer with the OpenShift CLI. You can download this utility from the OpenShift console.

But first, from the cluster view, download your kubeconfig by clicking on the Download kubeconfig button. Copy the password to access the console (user kubeadmin).

Click the Open Console button, or go to the URL https://console-openshift-console.apps.<cluster_name>.<base_domain>. In this example, it is https://console-openshift-console.apps.in.lab.home/.

From the web console, click ? to see the link to Command line tools or go to https://console-openshift-console.apps.<cluster_name>.<base_domain>/command-line-tools.

Move the binary you downloaded to a location on your computer that is in your PATH. In this example, I used:

$ mv ~/Downloads/oc-4.12.2-linux/* ~/bin

To authenticate with the Kubernetes API, you must move the kubeconfig to ~/.kube/config (create the ~/.kube directory first if it does not exist):

$ mkdir -p ~/.kube
$ mv ~/Downloads/kubeconfig ~/.kube/config

Enjoy OpenShift

At this point, you should be able to contact the cluster from your computer:

$ oc cluster-info
Kubernetes control plane is running at https://api.in.lab.home:6443

You can check the node’s status with the oc get nodes command:

$ oc get nodes
NAME             STATUS   ROLES                         AGE    VERSION
host3.lab.home   Ready    control-plane,master,worker   152m   v1.25.4+a34b9e9

For more commands, check the CLI reference.
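If you want to script that node check, here is a small sketch. The helper name is hypothetical; it just filters the output of oc get nodes --no-headers and prints any node whose STATUS column is not exactly Ready.

```shell
# Sketch only: list nodes that are not Ready.

# not_ready_nodes
#   Reads `oc get nodes --no-headers` output on stdin and prints the NAME of
#   every node whose STATUS is not exactly "Ready". A combined status such as
#   "Ready,SchedulingDisabled" is reported too, which may or may not be what you want.
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Example (not run here):
#   oc get nodes --no-headers | not_ready_nodes
```

An empty result means every node reported Ready. For a fuller picture, oc get clusteroperators shows whether the cluster’s own components have settled.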

Conclusions

The installation process has evolved significantly. Give it a try: you can run OpenShift even on a $300 server.

Thanks to Chris Keller and Juan Jose Floristan for their support in setting up my single-node cluster. I would still be banging my head against the wall if it wasn’t for them.

This was originally published on Enable Sysadmin, which might be discontinued.